Agentic AI for data analysis in Pigment

Agentic AI, in which one or more AI agents pursue goals autonomously or collaboratively, represents a new frontier in generative AI. Unlike traditional large language models (LLMs) that operate independently, these systems combine specialized agents with unique roles to handle complex, open-ended tasks. Here, we’ll explore how Pigment uses this approach for data analysis, empowering clients to gain deeper insights.

Pigment "Insights" feature

At Pigment, we’re developing an AI assistant that performs in-depth analysis on client data, identifying patterns and extracting business insights that would otherwise be difficult to uncover. A typical dataset may consist of a metric (e.g., sales) defined across several dimensions (e.g., region, quarter, product). Common tasks include explaining metric values (e.g., "What contributed to the total cost in 2024?") or investigating differences between figures (e.g., "Why are Q3 sales lower than last year?").

While tools like PandasAI can perform AI-assisted data analysis on a given dataset, our implementation within Pigment’s SaaS app is unique in several ways:

- Targeted Data Access: Rather than pulling in the entire dataset (which is often very large), the AI assistant retrieves specific data projections from Pigment’s backend, focusing only on relevant dimensions.

- Interactive Visualizations: We leverage Pigment’s interactive views (diagrams showing data), providing users with a consistent experience across AI and non-AI features. This consistency removes the need for the assistant to generate visualizations through code.

From the point of view of the Pigment backend, the Insights assistant acts as an independent module, communicating with users in natural language, retrieving data as needed, and generating view structures that are then populated with data by the backend to create final visualizations.
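To make the idea of a data projection concrete, here is a minimal sketch in plain Python. The rows, the dimension names, and the `project` helper are all hypothetical and are not Pigment's API. The point is only that the assistant works with a small aggregate over the dimensions it asked for, rather than the full dataset.

```python
from collections import defaultdict

# Hypothetical fact rows: one metric (sales) defined over three dimensions.
ROWS = [
    {"region": "EUROPE", "quarter": "Q3 24", "product": "A", "sales": 120.0},
    {"region": "EUROPE", "quarter": "Q4 24", "product": "A", "sales": 180.0},
    {"region": "UK", "quarter": "Q3 24", "product": "B", "sales": 90.0},
    {"region": "UK", "quarter": "Q4 24", "product": "B", "sales": 150.0},
    {"region": "UK", "quarter": "Q4 24", "product": "A", "sales": 60.0},
]


def project(rows, metric, keep_dims):
    """Sum `metric` over every dimension that is not listed in `keep_dims`."""
    totals = defaultdict(float)
    for row in rows:
        key = tuple(row[dim] for dim in keep_dims)
        totals[key] += row[metric]
    return dict(totals)


# Two projections of the same metric; neither exposes the raw rows.
by_quarter = project(ROWS, "sales", ["quarter"])
by_region_quarter = project(ROWS, "sales", ["region", "quarter"])
print(by_quarter)
```

A breakdown over one dimension, as in the conversation examples later in this article, corresponds to a projection with a single entry in `keep_dims`.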

Traditional LLM chaining vs agentic AI

LLM chaining, a more traditional approach for handling complex tasks, relies on a sequence of steps where each subsequent step follows based on predefined rules and previous outputs. At Pigment, we use LLM chaining for certain structured tasks, such as producing visuals that answer a question, like "Show me actual sales vs. budget."

However, for nuanced data analysis (often initiated by an open-ended "why" question), the path to insight is less clear. A classic example is Simpson's paradox, where a trend that holds within each subgroup can reverse once the data is aggregated. Addressing these challenges often calls for a divide-and-conquer strategy, which is a natural fit for agentic AI because each agent can tackle its segment of the task independently.

Recent advancements in LLMs, including OpenAI's o1 model, are beginning to address more complex logical tasks. Agentic AI extends these capabilities by positioning LLMs as the “brains” of agents that can use specialized tools (such as data retrieval functions or external sources) and collaboratively solve problems. Here, multiple agents work together with back-and-forth interactions, often requiring a supervisory agent to orchestrate these exchanges. This design allows agentic systems using lightweight LLMs to achieve results comparable to, and sometimes surpassing, more advanced non-agent LLMs.

Agent design with LangGraph

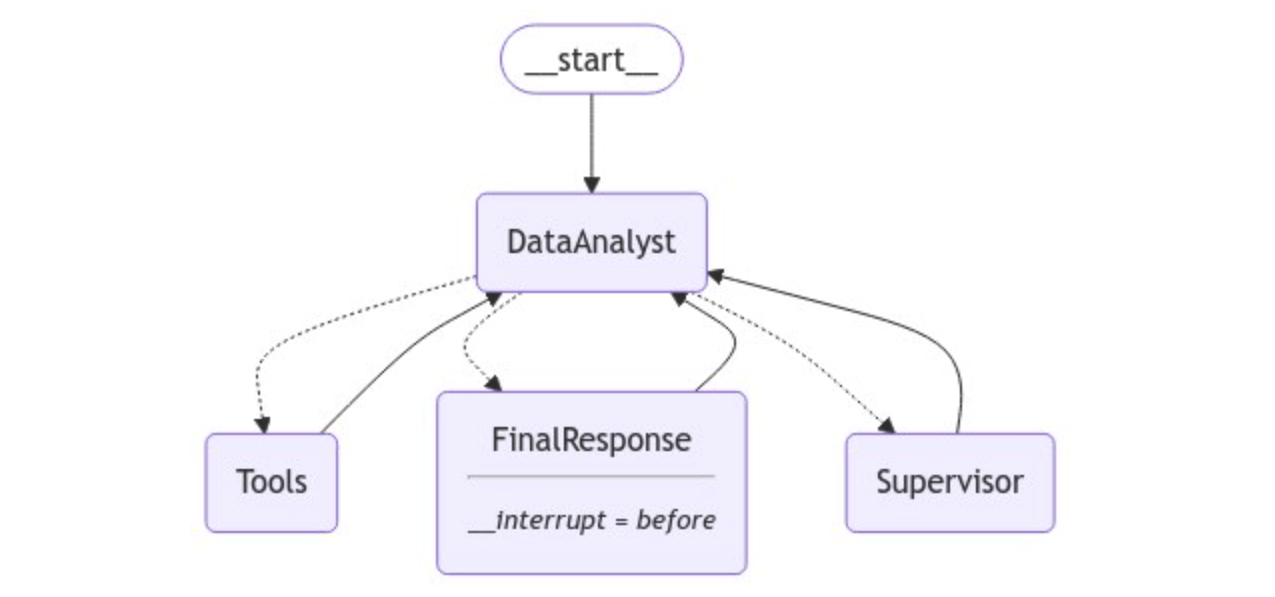

For agent design, we use the LangGraph framework, which integrates seamlessly with LangChain and allows for detailed customization of agent interactions. In LangGraph, the agent system is represented as a graph where each agent and tool acts as a node, with specific interaction rules forming the connections.

In our setup:

- The DataAnalyst agent calls the underlying LLM and manages data requests and filter manipulations via its tools.

- A human-in-the-loop is incorporated via a special FinalResponse node. Even though it's not an executed tool, it is called via the tool calling interface to ensure the output is structured and contains the summary and the suggested next steps.

- Currently, a Supervisor agent ensures each process concludes with a user response, and in the future, it will manage more complex analysis flows and agent handoffs.
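As a rough illustration of how a non-executed FinalResponse tool can enforce structured output, the sketch below declares a tool schema and validates the arguments the LLM passes to it. The two fields (summary and next steps) come from the description above. The schema layout, the `FinalResponse` dataclass, and the `parse_final_response` helper are assumptions made for this example, not Pigment's actual code.

```python
from dataclasses import dataclass, field

# Hypothetical tool schema in the JSON-schema style commonly accepted by
# tool-calling interfaces; the exact shape used at Pigment is not known.
FINAL_RESPONSE_SCHEMA = {
    "name": "FinalResponse",
    "description": "Finish the turn with a summary and suggested next steps.",
    "parameters": {
        "type": "object",
        "properties": {
            "summary": {"type": "string"},
            "next_steps": {"type": "array", "items": {"type": "string"}},
        },
        "required": ["summary", "next_steps"],
    },
}


@dataclass
class FinalResponse:
    summary: str
    next_steps: list[str] = field(default_factory=list)


def parse_final_response(arguments: dict) -> FinalResponse:
    """Check the tool-call arguments and build a FinalResponse from them."""
    required = FINAL_RESPONSE_SCHEMA["parameters"]["required"]
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"FinalResponse is missing fields: {missing}")
    return FinalResponse(
        summary=str(arguments["summary"]),
        next_steps=[str(step) for step in arguments["next_steps"]],
    )


response = parse_final_response(
    {
        "summary": "Q4 24 is the strongest quarter.",
        "next_steps": ["Focus on the top contributing regions: EUROPE, UK"],
    }
)
print(response.summary)
```

Because the node is never executed as a tool, the parsed object can be handed directly to the user-facing layer, which then waits for the next human message.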

A typical agent conversation might look like:

- Human: "Can you help me best understand contributions to the total amount of Product Sales (7430)?"

- DataAnalyst: calls BreakdownDimension tool

- BreakdownDimension tool: "Breakdown over dimension Quarter: ..."

- BreakdownDimension tool: "Breakdown over dimension Region: ..."

- DataAnalyst: "Here's what I found so far ..."

- Supervisor: "Please call FinalResponse function to finish the conversation."

- DataAnalyst: calls FinalResponse with a summary: "Q4 24 is the strongest quarter, contributing the highest sales...", next steps: "Focus on the top contributing regions: EUROPE, UK", "Investigate the strong performance in Q4 24"

- (next turn) Human: "Focus on Q4 24"

- DataAnalyst: calls SelectItems tool

- etc.

The core architecture of the agent graph is outlined in the following Python code:

```python
from langgraph.graph import StateGraph

# AgentState, llm_model, the tool lists, the _build_*_node helpers and
# _route_from_data_analyst are defined elsewhere in the codebase.

# Initialize a new State graph (the most common type of graph in LangGraph)
graph = StateGraph(AgentState)

# Define graph nodes
graph.add_node("DataAnalyst", _build_data_analyst_node(llm_model, executed_tools, final_response_tool))
graph.add_node("Tools", _build_tools_node(executed_tools))
graph.add_node("Supervisor", _build_supervisor_node())
graph.add_node("FinalResponse", _build_final_response_node())
graph.set_entry_point("DataAnalyst")

# Define conditional edges from the `DataAnalyst` node
graph.add_conditional_edges(
    source="DataAnalyst",
    path=_route_from_data_analyst,
    path_map={"Tools": "Tools", "FinalResponse": "FinalResponse", "Supervisor": "Supervisor"},
)

# Define non-conditional edges that route back to the `DataAnalyst` node
graph.add_edge(start_key="Tools", end_key="DataAnalyst")
graph.add_edge(start_key="FinalResponse", end_key="DataAnalyst")
graph.add_edge(start_key="Supervisor", end_key="DataAnalyst")

# Compile the graph with a breakpoint before the `FinalResponse` node:
# we manually inject the user input into the state and relaunch the graph.
compiled_graph = graph.compile(interrupt_before=["FinalResponse"])
```

The _build_*_node functions contain the internal logic of each node, while the _route_from_data_analyst function manages the conditional switching between nodes.
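The routing function itself is not shown in this article. One plausible shape, consistent with the example conversation earlier, is sketched below. It assumes messages are plain dictionaries with an optional `tool_calls` list, whereas a real implementation would more likely inspect LangChain message objects. The name `route_from_data_analyst_sketch` is hypothetical. The returned strings match the keys of the `path_map` used when declaring the conditional edges.

```python
def route_from_data_analyst_sketch(state: dict) -> str:
    """Pick the next node based on the DataAnalyst's latest message."""
    last_message = state["messages"][-1]
    tool_calls = last_message.get("tool_calls", [])
    if not tool_calls:
        # Plain text without a tool call: hand over to the Supervisor, which
        # asks the DataAnalyst to finish the turn with a FinalResponse.
        return "Supervisor"
    if any(call["name"] == "FinalResponse" for call in tool_calls):
        # The structured answer is ready; the graph pauses before this node.
        return "FinalResponse"
    # Any other tool call is an executed tool (data retrieval, filters, ...).
    return "Tools"


tool_state = {"messages": [{"tool_calls": [{"name": "BreakdownDimension"}]}]}
text_state = {"messages": [{"content": "Here's what I found so far ..."}]}
final_state = {"messages": [{"tool_calls": [{"name": "FinalResponse"}]}]}
print(route_from_data_analyst_sketch(tool_state))
```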

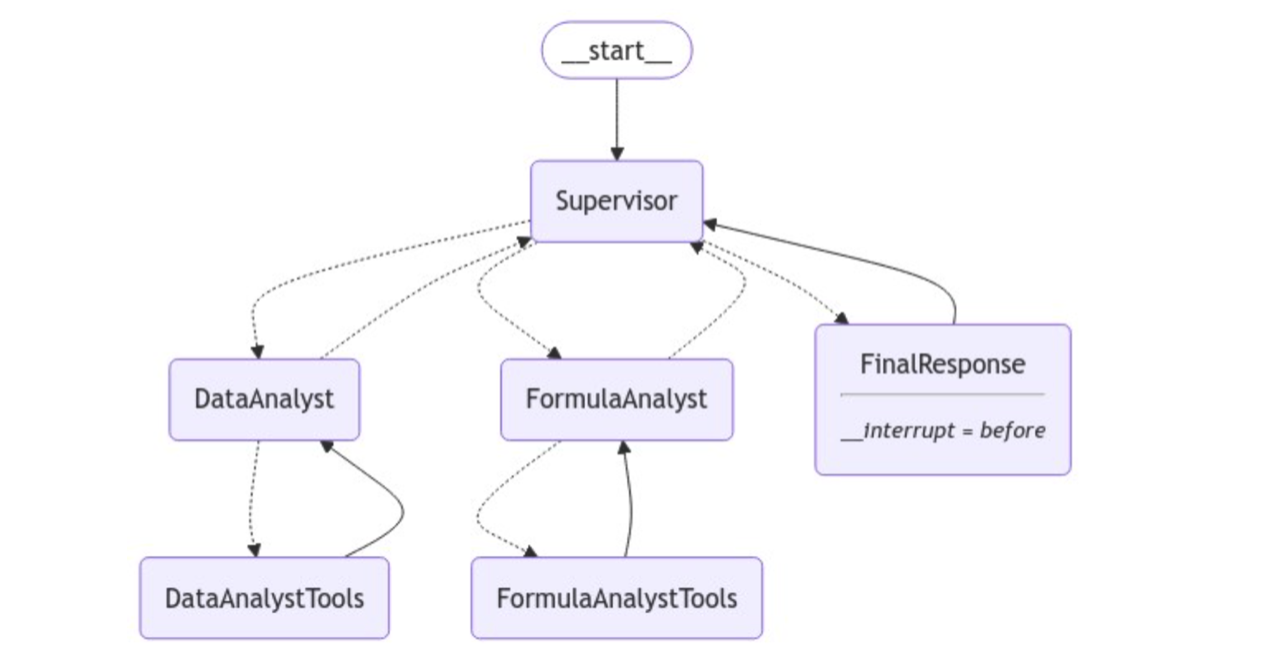

Moving toward multi-agent systems

Agentic AI is particularly effective when tasks can be divided, with specialized agents handling each part. This division of labor prevents overloading an LLM with complex prompts. In Pigment, data often involves interconnected metrics linked by formulas written in Pigment’s language. A FormulaAnalyst agent could assist in analyzing input metrics' impact by retrieving relevant documentation and applying specialized tools. DataAnalyst and FormulaAnalyst can work independently on their tasks, reporting to the Supervisor node that becomes the graph entry point. This modular approach allows for future agents to handle tasks like outlier detection or time-series analysis.
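A supervisor-first flow of this kind could be sketched as follows, in plain Python rather than LangGraph so that it runs on its own. Everything here is an assumption made for illustration: the `reports` and `metric_has_formula` state keys, the `supervisor_next` and `run_turn` helpers, and the stub agents standing in for LLM-backed nodes.

```python
def supervisor_next(state: dict) -> str:
    """Decide which node runs next, based on the reports collected so far."""
    reports = state["reports"]
    if state["metric_has_formula"] and "FormulaAnalyst" not in reports:
        return "FormulaAnalyst"
    if "DataAnalyst" not in reports:
        return "DataAnalyst"
    return "FinalResponse"


def run_turn(state: dict, agents: dict) -> list[str]:
    """Call agents until the Supervisor decides to respond; return the order."""
    visited = []
    while True:
        next_node = supervisor_next(state)
        visited.append(next_node)
        if next_node == "FinalResponse":
            return visited
        # Each agent reports back to the Supervisor through the shared state.
        state["reports"][next_node] = agents[next_node](state)


# Stub agents: real ones would call an LLM and their own tools.
agents = {
    "FormulaAnalyst": lambda state: "formula explained",
    "DataAnalyst": lambda state: "breakdown computed",
}
state = {"metric_has_formula": True, "reports": {}}
order = run_turn(state, agents)
print(order)
```

Adding a further specialist, such as an outlier-detection agent, would then mean registering one more entry in `agents` and one more rule in `supervisor_next`.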

Here is a conversation addressing Simpson's paradox (comparing two treatments for kidney stones):

- Human: "Can you help me best understand why the success rate is higher for Treatment B?"

- Supervisor: calls FormulaAnalyst

- FormulaAnalyst (after calling its tools): "The formula calculates the success rate by dividing the summed Succeeded metric by the summed Trials metric, after removing the Stone_size dimension from both metrics."

- Supervisor: calls DataAnalyst

- DataAnalyst (after calling its tools): "Considering the above, the observed higher overall success rate for Treatment B is due to a disproportionate number of trials with small stones, which have a generally higher success rate, creating a bias when comparing treatments."

- Supervisor: calls FinalResponse summarizing the findings.
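The arithmetic behind this conversation can be checked with a short script. The article does not state which numbers its dataset contained, so the figures below are an assumption: they are the ones commonly quoted for the kidney-stone example. The metric names follow the formula description above (Succeeded divided by Trials, with the stone size dimension removed).

```python
# (succeeded, trials) per treatment and stone size; commonly quoted figures,
# assumed here because the article does not list its own data.
TRIALS = {
    "A": {"small": (81, 87), "large": (192, 263)},
    "B": {"small": (234, 270), "large": (55, 80)},
}


def rate_by_size(treatment, size):
    """Success rate for one treatment within one stone-size group."""
    succeeded, trials = TRIALS[treatment][size]
    return succeeded / trials


def overall_rate(treatment):
    """Sum Succeeded and Trials over stone size, then divide."""
    succeeded = sum(s for s, _ in TRIALS[treatment].values())
    trials = sum(t for _, t in TRIALS[treatment].values())
    return succeeded / trials


for size in ("small", "large"):
    print(size, round(rate_by_size("A", size), 3), round(rate_by_size("B", size), 3))
print("overall", round(overall_rate("A"), 3), round(overall_rate("B"), 3))
```

With these figures Treatment A has the higher rate within each stone size, yet Treatment B has the higher overall rate, because most of Treatment B's trials (270 of 350) involve small stones, which succeed more often under either treatment.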

Takeaways

Our experience with agentic AI has been promising, especially for handling complex data analysis tasks. However, this approach presents both advantages and challenges:

- Creativity vs consistency: Generative AI enhances creativity, enabling novel insights and perspectives, though it occasionally brings an element of unpredictability. Using agents rather than standalone LLMs provides added flexibility, but it may also increase unpredictability. At Pigment, our backend safeguards data integrity in views, and we are considering adding a verification agent to further reinforce accuracy in generated text.

- Scope management: The assistant suggests next steps, which sometimes extend beyond its intended scope. During lengthy conversations, it may recommend broader business optimizations, which we consider a bug rather than a feature (we plan a separate Pigment assistant for business knowledge questions). System prompt adjustments help narrow this scope but need to be revisited continuously.

- Debugging and testing: Testing agentic systems can be complex due to multiple agents and tools. Automated testing is challenging as responses aren’t always strictly correct or incorrect, though we can test for expected elements like view generation and analysis flow.
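One way to test for expected elements without judging the wording of a response is to assert on the structure of a recorded run. The sketch below is a hypothetical example of that idea. The trace format (a list of steps with `node`, `tool`, and `args` keys) and the `check_trace` helper are assumptions, not Pigment's test harness.

```python
def is_subsequence(expected, actual):
    """True if the `expected` items appear in `actual` in the same order."""
    remaining = iter(actual)
    return all(item in remaining for item in expected)


def check_trace(trace, expected_tools):
    """Return a list of structural problems found in one recorded run."""
    problems = []
    tool_steps = [step for step in trace if step.get("tool")]
    called = [step["tool"] for step in tool_steps]
    if not is_subsequence(expected_tools, called):
        problems.append(f"expected tool order {expected_tools}, got {called}")
    if not called or called[-1] != "FinalResponse":
        problems.append("run did not end with FinalResponse")
        return problems
    final_args = tool_steps[-1].get("args", {})
    if not final_args.get("summary"):
        problems.append("FinalResponse has an empty summary")
    if not final_args.get("next_steps"):
        problems.append("FinalResponse suggests no next steps")
    return problems


GOOD_TRACE = [
    {"node": "DataAnalyst", "tool": "BreakdownDimension", "args": {"dimension": "Quarter"}},
    {"node": "DataAnalyst", "tool": "BreakdownDimension", "args": {"dimension": "Region"}},
    {"node": "DataAnalyst", "tool": None, "args": {}},
    {
        "node": "DataAnalyst",
        "tool": "FinalResponse",
        "args": {"summary": "Q4 24 is the strongest quarter", "next_steps": ["Focus on Q4 24"]},
    },
]
print(check_trace(GOOD_TRACE, ["BreakdownDimension", "FinalResponse"]))
```

Checks like these tolerate variation in the generated text while still catching runs that skip an analysis step or end without a structured answer.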

Overall, agentic AI provides the adaptability needed for detailed data analysis and shows significant potential as we continue to add new agents and functionalities.